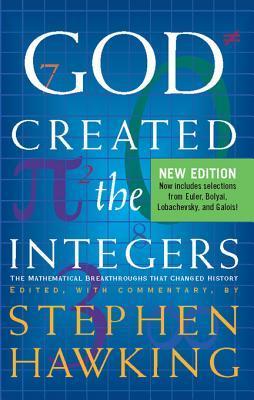

God Created The Integers: The Mathematical Breakthroughs that Changed History

Stephen Hawking

Publisher

Running Press Adult

Publication Date

3/29/2007

ISBN

9780762432721

Pages

1376

About the Author

Stephen Hawking

Questions & Answers

The book "God Created the Integers: The Mathematical Breakthroughs That Changed History" highlights several key mathematical breakthroughs that have shaped history:

- Euclid's Elements: This foundational work developed geometry rigorously from axioms and includes a proof of the Pythagorean theorem.

- Archimedes: His use of the method of exhaustion allowed precise calculation of areas and volumes, including an approximation of π.

- Diophantus of Alexandria: Introduced an early algebraic symbolism and solved equations, laying the groundwork for algebra.

- René Descartes: Created analytic geometry, uniting algebra and geometry.

- Isaac Newton: Developed calculus, revolutionizing physics and the understanding of motion and gravity.

- Leonhard Euler: Made significant contributions to number theory, series, and graph theory, including Euler's formula.

- Pierre-Simon Laplace: Developed probability theory into a systematic discipline and advanced statistical methods.

- Georg Friedrich Bernhard Riemann: Advanced the theory of complex functions and formulated the Riemann hypothesis.

- Kurt Gödel and Alan Turing: Addressed the limits of mathematical knowledge, with Gödel's incompleteness theorems and Turing's work on computability.

- Henri Poincaré: Made significant contributions to topology and dynamical systems.

These breakthroughs have not only advanced mathematics but also influenced other scientific disciplines, technology, and our understanding of the universe.
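Archimedes' approximation of π can be illustrated with a short computation. In Measurement of a Circle he trapped π between the perimeters of regular polygons inscribed in and circumscribed about a circle, doubling from 6 to 96 sides, and obtained 223/71 < π < 22/7. The sketch below is a modern floating-point restatement, not his hand arithmetic (which used careful rational bounds on square roots); the harmonic-mean/geometric-mean recurrence and the function name `archimedes_bounds` are our own choices.

```python
import math


def archimedes_bounds(doublings: int) -> tuple[int, float, float]:
    """Return (sides, lower, upper) with lower < pi < upper.

    lower and upper are the perimeters of the regular polygons inscribed in
    and circumscribed about a circle of diameter 1. We start from hexagons
    and double the number of sides `doublings` times.
    """
    sides = 6
    upper = 2 * math.sqrt(3)  # circumscribed hexagon perimeter
    lower = 3.0               # inscribed hexagon perimeter
    for _ in range(doublings):
        # Doubling step: the new outer perimeter is the harmonic mean of the
        # old pair; the new inner perimeter is the geometric mean of the old
        # inner perimeter and the new outer one.
        upper = 2 * upper * lower / (upper + lower)
        lower = math.sqrt(upper * lower)
        sides *= 2
    return sides, lower, upper


# Four doublings: 6 -> 12 -> 24 -> 48 -> 96 sides, as in Archimedes' computation.
sides, lower, upper = archimedes_bounds(4)
print(f"{sides}-gon: {lower:.5f} < pi < {upper:.5f}")
```

Archimedes' own bounds, 223/71 and 22/7, are slightly looser than the values this prints, because he rounded each intermediate square root in the safe direction.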

The contributions of Euclid, Archimedes, Diophantus, and the other mathematicians in the book trace how mathematical thought evolved over the centuries. Euclid's "Elements" laid the foundation for geometry, deriving its results by rigorous proof from a small set of axioms. Archimedes' work in geometry and mechanics, including the method of exhaustion, demonstrated the power of approximation and anticipated the concept of a limit. Diophantus introduced algebraic symbolism and solved equations, marking an early stage in the development of algebra. Descartes' analytic geometry unified algebra and geometry, paving the way for calculus. Newton's calculus, and the laws of motion set out in his Principia, revolutionized physics. Euler's work in number theory and analysis expanded the scope of mathematics. Gauss's Disquisitiones Arithmeticae systematized number theory, and Cauchy's work on calculus gave it a rigorous foundation. Lobachevsky and Bolyai challenged the parallel postulate by developing a geometry in which it does not hold. Finally, Weierstrass, Dedekind, Cantor, Lebesgue, Gödel, and Turing shaped modern mathematics through their work in analysis, set theory, and logic. Together, these contributions show the continuous evolution of mathematical thought from geometry and algebra to analysis, logic, and beyond.

The book illustrates the profound connections between mathematical discoveries and their applications in the physical sciences. Euclid's geometry, for instance, laid the foundation for understanding geometric shapes and their properties, which Archimedes applied to calculate areas and volumes, including approximations of π. René Descartes' analytic geometry unified geometry and algebra, helping Isaac Newton develop calculus and revolutionize science. Euler's work on series advanced analysis, and his solution of the Seven Bridges of Königsberg problem founded graph theory, the mathematics of networks. Fourier's work on the heat equation and Riemann's work on complex analysis and geometry advanced both physics and mathematics. Cauchy's complex analysis became a standard tool in electromagnetism and quantum mechanics, while the modular arithmetic that Gauss formalized in his number theory now underlies public-key cryptography. Gödel's and Turing's contributions to logic and computability theory have implications for artificial intelligence and computer science. These examples show that mathematical discoveries often lead to new physical insights, and vice versa, creating a cycle of innovation.
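Euler's Königsberg argument comes down to counting: every time a walk passes through a land mass it uses two bridges, so only the start and end of the walk can touch an odd number of bridges. The sketch below applies that degree test; the land-mass labels and the function names are hypothetical choices for illustration. Euler showed the condition is necessary; that it is also sufficient for connected graphs was proved later, by Carl Hierholzer.

```python
from collections import Counter

# Königsberg as a multigraph. The labels are our own: "A" is the central
# island (Kneiphof), "B" and "C" are the two river banks, "D" is the eastern
# land mass. Each pair is one bridge.
KONIGSBERG_BRIDGES = [
    ("A", "B"), ("A", "B"),
    ("A", "C"), ("A", "C"),
    ("A", "D"), ("B", "D"), ("C", "D"),
]


def odd_degree_vertices(edges: list[tuple[str, str]]) -> list[str]:
    """Return the vertices touched by an odd number of edges, sorted."""
    degree = Counter()
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    return sorted(node for node, d in degree.items() if d % 2 == 1)


def has_euler_walk(edges: list[tuple[str, str]]) -> bool:
    """Euler's degree test for a connected multigraph (connectivity is
    assumed here, not checked): a walk crossing every edge exactly once
    exists iff zero or two vertices have odd degree."""
    return len(odd_degree_vertices(edges)) in (0, 2)


print(odd_degree_vertices(KONIGSBERG_BRIDGES))  # ['A', 'B', 'C', 'D']
print(has_euler_walk(KONIGSBERG_BRIDGES))       # False
```

Adding an eighth bridge between the two banks leaves only A and D with odd degree, so `has_euler_walk(KONIGSBERG_BRIDGES + [("B", "C")])` returns `True`.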

Mathematical concepts like infinity, continuity, and logic have profoundly influenced our worldview and technological development. Infinity, for instance, has given us a way to think about the vastness of the universe and the limits of human knowledge, fostering a sense of awe and curiosity. Continuity, on which calculus rests, has enabled precise calculation in physics, engineering, and computer science, supporting technological advances and the study of complex systems.

Logic, the foundation of mathematical proof, has shaped how we reason and solve problems. It has been instrumental in the creation of algorithms, which are the backbone of modern computing and artificial intelligence. The rigorous application of logic in mathematics has also produced formal systems and formal methods, which are used, for example, to verify that the cryptographic protocols behind secure communication and data protection behave as intended.

In summary, mathematical concepts have not only expanded our understanding of the world but have also driven technological innovation and shaped modern life.

Gödel's incompleteness theorems and Turing's work on computability have profound philosophical implications. Gödel's first theorem shows that any consistent formal system whose axioms can be listed by an algorithm, and which can express basic arithmetic, contains statements that can be neither proved nor disproved within that system. Provability from a fixed set of axioms therefore never captures all mathematical truth. This undermined the hope, central to Hilbert's program, of grounding all of mathematics in a single complete and consistent axiom system, and it suggests that our understanding of the universe may be inherently incomplete.

Turing's work on computability, particularly his concept of the Turing machine, provides a formal definition of what it means for a number or function to be computable. This has implications for the philosophy of mind, since it raises questions about the nature of intelligence and whether human thought can be fully captured by machines. Turing's proof that there are uncomputable numbers, and that the Entscheidungsproblem (Hilbert's decision problem) has no algorithmic solution, shows that there are definite limits to what any machine can compute.

Together, these ideas suggest that our quest for knowledge is inherently limited by the nature of our logical systems and the physical world. They challenge the Enlightenment ideal of progress and the belief that human reason can fully understand the universe, leading to a more nuanced view of human understanding and the limits of knowledge.
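Turing's machines are simple enough to simulate directly, which makes the formal definition of computability more concrete. The sketch below is a minimal one-tape simulator; its rule-table format, the `_` blank symbol, the `halt` state name, and the `INCREMENT` example machine are hypothetical conventions chosen for illustration, not Turing's 1936 notation.

```python
from typing import Dict, Optional, Tuple

BLANK = "_"

# Rule table: (state, symbol read) -> (symbol to write, head move, next state).
# A head move is -1 (left) or +1 (right).
Rules = Dict[Tuple[str, str], Tuple[str, int, str]]


def run(rules: Rules, tape: str, state: str, head: int,
        max_steps: int = 10_000) -> Optional[str]:
    """Simulate a one-tape Turing machine until it enters the state "halt".

    Returns the tape contents with surrounding blanks stripped, or None if
    the machine has not halted after max_steps steps. A missing rule raises
    KeyError.
    """
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    steps = 0
    while state != "halt":
        if steps == max_steps:
            return None  # gave up; we cannot tell whether it would ever halt
        write, move, state = rules[(state, cells.get(head, BLANK))]
        cells[head] = write
        head += move
        steps += 1
    first, last = min(cells, default=0), max(cells, default=-1)
    return "".join(cells.get(i, BLANK) for i in range(first, last + 1)).strip(BLANK)


# Hypothetical example machine: add 1 to a binary number. The head starts on
# the rightmost digit in state "carry".
INCREMENT: Rules = {
    ("carry", "1"): ("0", -1, "carry"),   # 1 + 1 = 0, carry moves left
    ("carry", "0"): ("1", -1, "halt"),    # 0 + 1 = 1, no further carry
    ("carry", BLANK): ("1", -1, "halt"),  # past the leftmost digit: new leading 1
}

print(run(INCREMENT, "1011", "carry", head=3))  # 1100
```

The `max_steps` cap is a design choice forced by the theory: a simulator can confirm that a machine halted, but while it is still running, no general method can decide whether it ever will. A machine such as `{("loop", BLANK): (BLANK, 1, "loop")}` moves right forever, so `run` returns `None` for it.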